We’re currently updating the Digital Service Standard. Our aim is to move from looking at isolated transactions to supporting whole, end-to-end services: services as users understand them. This reflects a commitment in the Government Transformation Strategy to design and deliver joined-up services.

The new standard will be used by a wide range of civil servants across different departments and professions to help them build better services. This meant that, while updating the standard, we had to speak to as wide a range of people as possible to make sure it would support them and the way they work.

The standard is not something that’s written by GDS and then sent out across government. It’s written in a collaborative way with many users. This meant our user research had to be as collaborative as possible.

Here’s how we conducted wide-ranging and collaborative user research into the Service Standard.

How we did the research

A series of interviews

We started out with a series of interviews – both individual interviews and group interviews. In total we spoke to more than 20 people inside and outside GDS. We spoke to people working on citizen-facing services, as well as people working on assurance and assessment teams, and people working on internal services and transformation teams.

We spoke to people who are Heads of Community and experts in a wide range of areas, including design, performance, technology, security, delivery, operations and digital.

The interviews focused on people’s experience using and meeting the current Digital Service Standard and their thoughts on how teams can be better supported to deliver end-to-end services.

Large-scale data analysis

The interviews generated a lot of data. It made sense to get to grips with this data through group analysis, with GDS colleagues from across the Service Design and Standards programme. The Government as a Platform team took a similar approach in their research to work out what components to look at next.

Based on this analysis, we developed some ideas on how to take the standard forward and challenges that government needs the standard to meet.

Consultation workshops

Following the interviews, we wanted to engage with a lot more people, and a wider variety of people, who are delivering and assuring digital services and leading digital transformation across government.

We decided to do this through 4 workshops. These took place across the country – in London, Sheffield, Newport and Newcastle – and involved 150 people from different disciplines.

For each of the workshops, we ran 2 exercises.

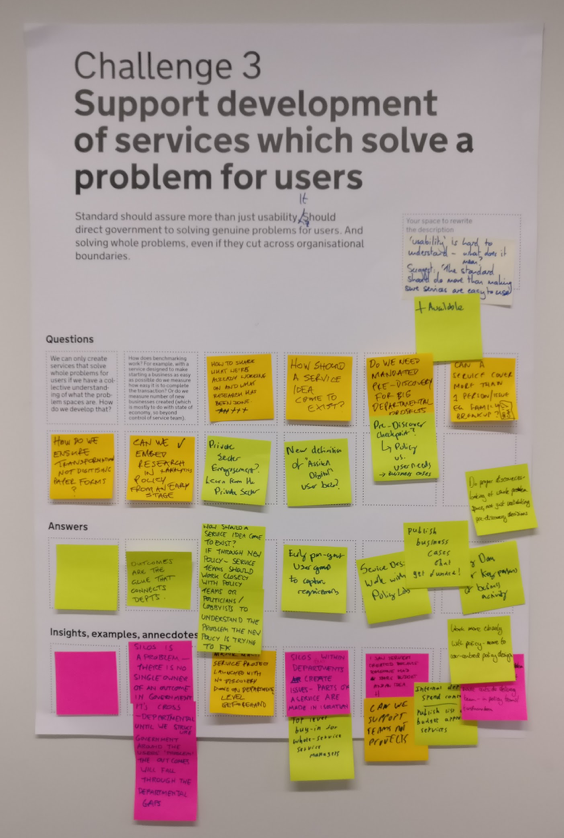

Consultation workshop exercise 1: 12 challenges

Following the interview stage of the research, we had identified 12 challenges that the new standard needs to meet. We wanted to get people’s feedback on these challenges.

This meant we needed a way for lots of people to give feedback on 12 different things at the same time. We decided the best method to use was small group exercises.

We organised the small group work by asking people to select what challenges they were most interested in working on. We then asked them to work through each challenge, one at a time, in groups of 4-5 people.

We gave the groups 15 minutes for each challenge and we had time for 6 or 7 rotations, so each group was able to look at about half the challenges.
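
As a rough check on these timings, the arithmetic can be sketched in a few lines of Python. The names below are illustrative rather than taken from the workshop plan, and the sketch assumes no changeover time between rotations.

```python
# Back-of-the-envelope check of the rotation timings described above.
# All names are illustrative; changeover time between rotations is ignored.
TOTAL_CHALLENGES = 12
MINUTES_PER_CHALLENGE = 15


def rotation_summary(rotations: int) -> tuple[int, float]:
    """Return (minutes of group work, share of challenges one group covers)."""
    minutes = rotations * MINUTES_PER_CHALLENGE
    share = rotations / TOTAL_CHALLENGES
    return minutes, share


for rotations in (6, 7):
    minutes, share = rotation_summary(rotations)
    print(f"{rotations} rotations: {minutes} minutes, {share:.0%} of challenges")
```

On these assumptions, 6 rotations take 90 minutes and cover half of the challenges, while 7 rotations take 105 minutes and cover a little over half.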

To gather feedback, we created 12 posters for people to contribute to. We asked people to tell us:

- Does the challenge need to be changed?

- What questions does the challenge raise?

- Do you have any answers to the questions?

- Do you have any anecdotes about the questions and answers?

We designed the posters in a way that would encourage people to add Post-its to them. The squares represent placeholders for Post-its and we also had some examples already printed on the poster.

Consultation workshop exercise 2: identifying themes

After getting feedback on the challenges, we then ran a show and tell.

We asked people to stand by the challenge they were most interested in or had worked on last. One or two people per group were nominated to present a summary of the questions, answers and insights for the challenge to the rest of the group.

One or two facilitators at each workshop captured these summaries on Post-it notes and did on-the-fly affinity diagramming to visualise the emerging themes.

We presented the findings of this quick-and-dirty affinity diagramming back to the group to stimulate the final discussion and wrap-up of the workshop.

After the first workshop, we refined the affinity diagram and added to it at each subsequent workshop, although the themes remained consistent across all 4 workshops.

For those who weren’t able to attend one of the workshops, we made the challenges available in a Google group with the same question/answer/anecdotes format.

How we’re using the data

We did more large-scale group analysis after each of the workshops. Taken together, the data from the 4 workshops came to 17,000 words.

We used this analysis to feed into the drafting of the standard and what it needs to include to support building whole end-to-end services. We also used the insights to identify what new guidance will be needed to support the updated Service Standard.

This insight was added to the Service Manual guidance backlog and we blogged about what we learnt from this research.

More research to come

This isn’t a one-time piece of research, for several reasons.

First, we haven’t changed the Service Standard in a long time, so we’ll be piloting the changes through updates to assurance and assessment processes.

We are also doing user research to make sure that new guidance we write for the Service Manual meets user needs.

And we are planning to update the standard on a more regular basis, rather than waiting another 2 years. Each time we make updates we’ll do this in a user-centric way.