During a firebreak earlier in the year, we carried out research to understand how our colleagues at the Government Digital Service (GDS) feel about the programmes they’re working on.

This work was inspired by the annual Civil Service People Survey and a desire to better understand the working experiences of people at GDS. There is also wider evidence that thinking about the experience of staff in this way can improve individual wellbeing as well as the health and productivity of an organisation.

We do user research at GDS with a broad definition of a ‘user’: anyone who uses the outputs of your work. This can include people within your own department and team. However, when carrying out research with colleagues, there are certain issues and sensitivities you need to be aware of.

When research participants are internal, the situations they describe can be easy to identify, so people may not end up being as anonymous as we would like. Here’s how we built on the usual planning process to deal with these potential issues.

Our process

There were certain topics that we knew we wanted to explore, based on the results of the People Survey. But we also wanted to leave the discussions open enough that unanticipated themes could emerge. That’s why we decided to do qualitative, exploratory interviews.

Planning

As we were interviewing colleagues about the intimate details of their working lives, we wanted to be as clear as possible about what participants would be signing up for. So we wrote a detailed information sheet. This covered how we planned to store and share data, and the limits of confidentiality, which we had checked with HR beforehand.

We looked at the Service Manual for guidance and supplemented it with other, more in-depth guidance on ethics.

Recruitment

We wanted to ensure that we’d be hearing a range of experiences, so we checked that our participant list was diverse in:

- disciplines

- mission teams

- seniority

- length of service

- gender

- ethnicity

Interviews

We wanted to ensure that all participants felt comfortable and free to speak openly, so we gave them assurances about privacy and anonymity. We all had existing relationships with most of the participants, so we offered participants a choice of interviewer, location and data-capturing method.

We conducted 10 interviews, each lasting about an hour. All interviews were scheduled using calendar invites set to ‘private’, so that the details were only visible to the participant and the researcher.

Data handling

We created a shared folder that only the 3 of us could access, which held all the consent forms, participant details, notes and analysis. Transcripts and recordings were also stored here, but deleted after we’d finished the analysis.

We kept all participants informed throughout the process about how we were handling their data, and about any changes from what we had originally planned.

Analysis

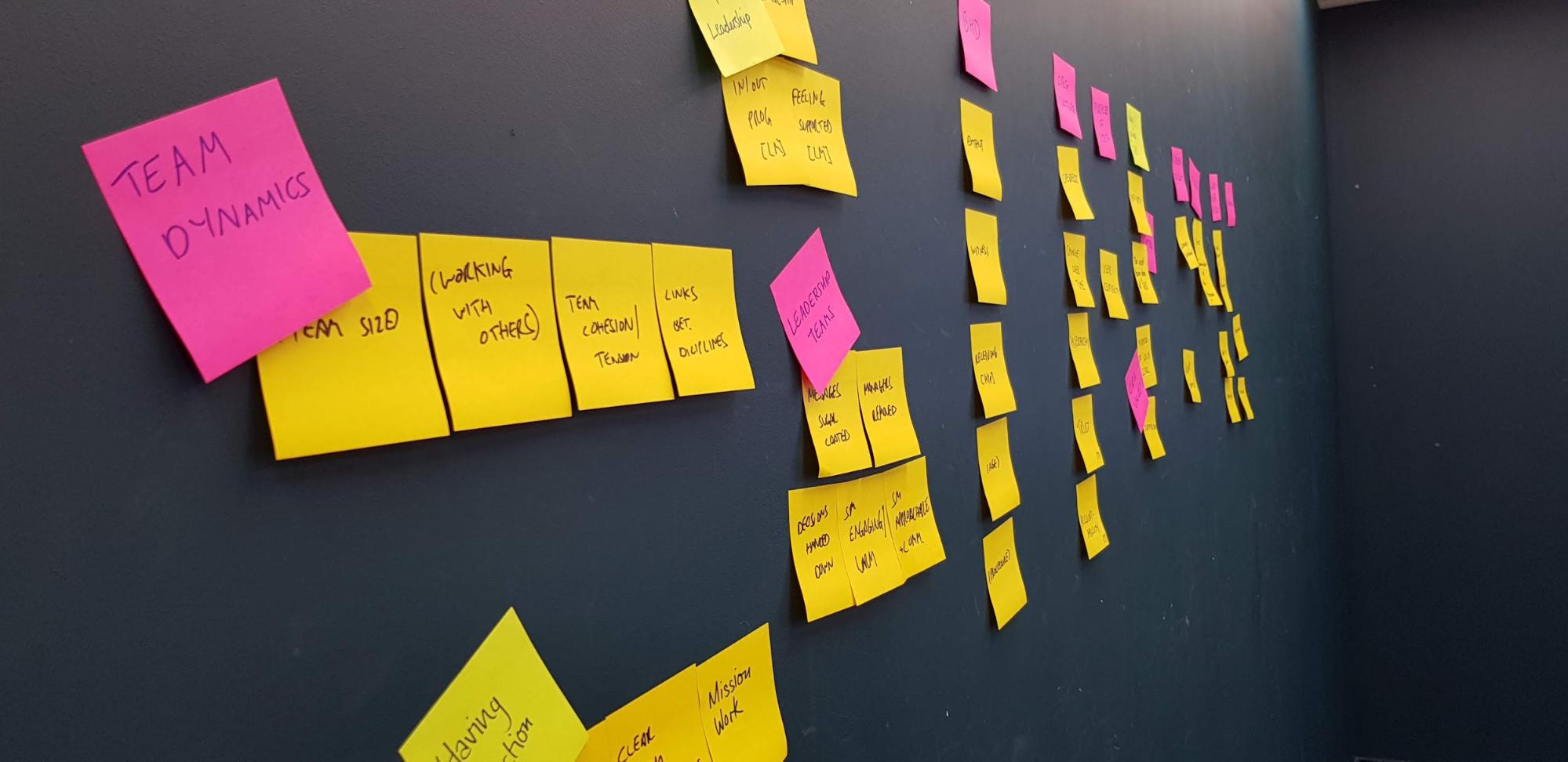

We used some of the topic areas from our discussion guide to frame initial analysis, but also adopted an exploratory approach to allow unanticipated but important themes to emerge.

We picked out a list of quotes from each transcript and shared it with the relevant participant early on, to make sure they were happy for us to include those quotes in the final presentation. We gave them the option to reword or remove any quote, with no pressure to explain why.

We covered a wide range of issues, which were organised into themes and sub-themes so that we could present a huge amount of qualitative data in a way that created a coherent narrative and was easy to navigate.

Sharing findings

We were aware that the findings would spark the interest of people across the Cabinet Office and also within GDS.

So we took special precautions to remove anything that might identify interviewees. This meant more than taking out names: we also needed to avoid ‘jigsaw identification’, where someone can be identified by piecing together several separate details. For example, people who worked in unique or specialist roles might be identified from a description of their work.

For the same reason, we avoided describing specific incidents and instead focused on underlying themes.

Involving participants

After completing our analysis we shared our draft report with participants for a final check before sharing more widely. This process improved the accuracy and validity of our analysis. It also meant that participants played an active role and had a positive research experience.

Being reflexive

This research involved a number of issues that made reflexive practice particularly useful, many of them relating to the fact that we were interviewing colleagues and touching on topics that directly affected us.

There are many definitions of reflexivity, but for us it meant the process of turning our focus inwards, to consider the effect we might have had on the research and the effect that the research might have had on us.

We did this by making space to reflect individually and as a group, and by acknowledging our own preconceptions. This meant we could minimise the effects of bias whilst also feeling emotionally prepared for the possibility of listening to difficult experiences.

Next steps

We’ve had interest in the work outside of GDS, so we’ve started sharing our methodology further by speaking to relevant contacts in other departments.

Adapting our standard methods in this way has proved successful for conducting research with colleagues on potentially sensitive subjects. It’s an example of how carefully we need to consider our users in situations like this.

Further work is now being done with more teams and within GDS networks to explore staff experiences in different settings and contexts.